Project

Machine learning

Client

Year

2015 - 2017

Collaboration

Following on the Repcol project, Principal Components looks at applying a diverse set of machine learning technologies to museum collections. It looks at how machine learning can give easier access to collections through better metadata and explorative interfaces. Concurrently it also explores the strange and uncanny artifacts and errors that arise from machine learning processes and errors.

The project would not have happened were it not for the adventurous archivists at the National Museum and the interests of our collaborating machine learning specialist Audun Mathias Øygard (@matsiyatzy).

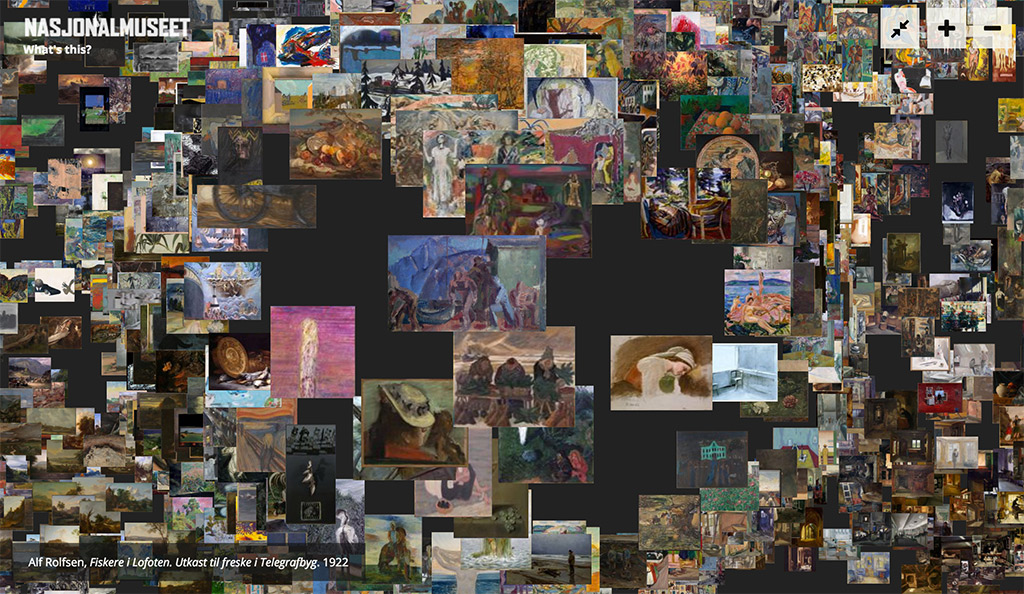

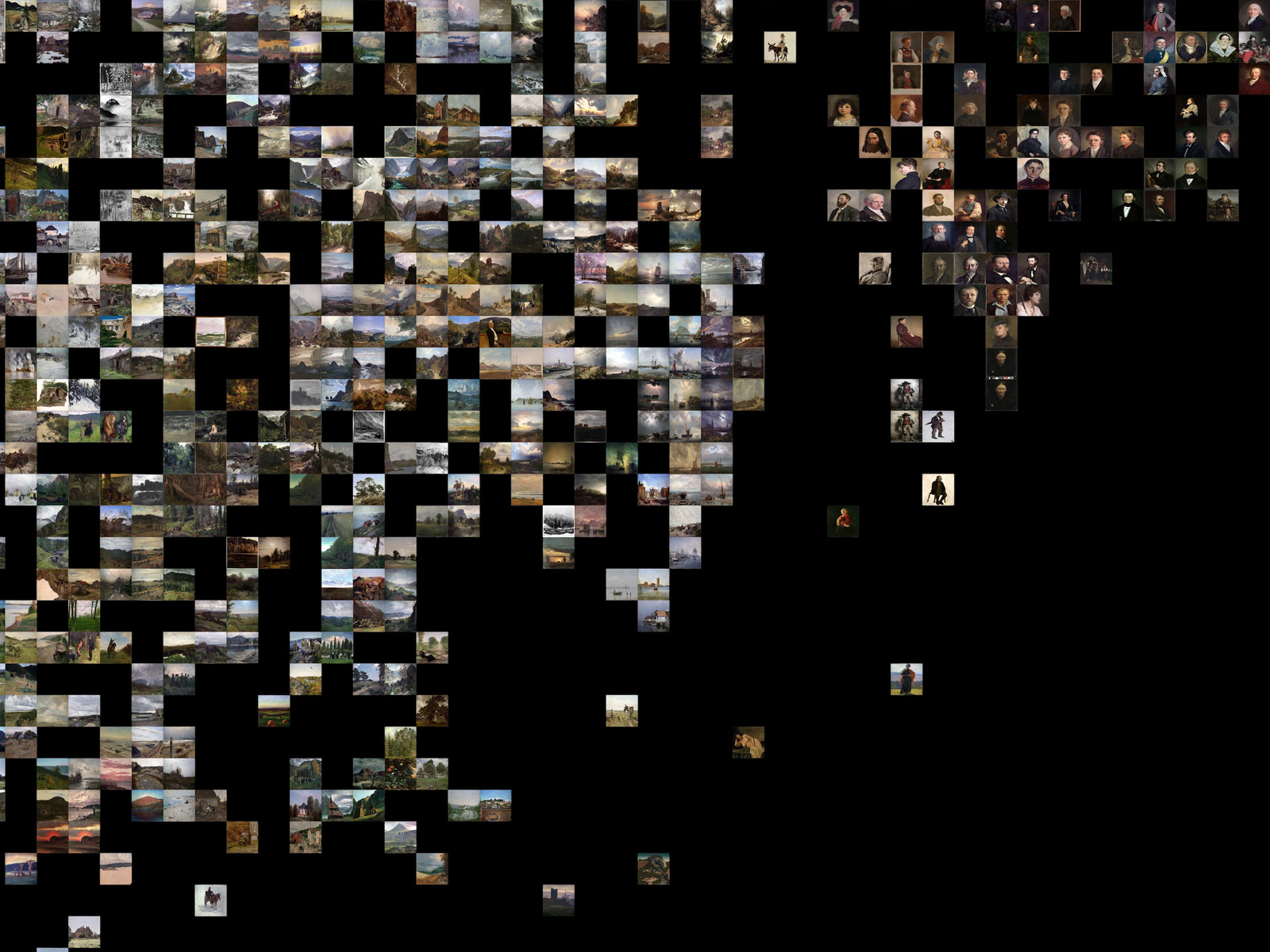

Amongst other things the project interrogates facial similarity, automatic tagging of works based on subject as well as using classification to cluster works into neighborhoods based on style. Some of these are experimental, but others found their way into user interfaces we are building for the National Museum's website.

View by similarity

It can be way difficult to find your way in large collections. Typically users have search and visual listings of similar works. This measure of 'similarity' is drawn from authoritative metadata or perhaps user-defined folksonomies.

It is difficult to get an overview and exploring is time consuming and usually amounts to just poking around.

So we were interested in getting at this problem by having an algorithm assess all the works by some similarity metric, allowing us to make a map of the entire collection where visually similar stuff is parked next to each other.

So how does this actually happen:

3900 Ma You're a prokaryote organism sloshing around, waiting for complexity to happen

1940s You're the psychologist Donald Hebb working on how human behavior happens. Inspired by the work of Spanish anatomist Santiago Ramón y Cajal, you along with some other people, posit a theory of learning, in competition with gestalt and pavlovian theory, that has neurons doing computation. Nice work, you bridge psychology and biology! It's known as Hebbian theory and you apply the label connectionism to it.

1960s You're Frank Rosenblatt and based on connectionist ideas you invent the Perceptron. It might be like a super-naive little part of a brain. Though real brains are of course very different. You custom build computers on the Navy's dime with hundreds of mechanical components to run the algorithm. You get some good results. Not as many you had hoped. Marvin Minsky and Seymour Papert write an entire book trashing your Perceptrons, "Do you even XOR?"

1970 - 1980 You're the field of machine learning enduring an AI (funding) Winter after having failed spectacularly to deliver on your promises in the 1960s. It lasts for years.

1980 - 2000s You're computer scientists. You come up with a way of calculating the contribution of every neuron to the overall error and how to correct it. You call it backpropagation and apply it to deeper networks running on faster hardware. In the 2000s you start getting better results. By the 2010s you're beating researchers using other methods. The results are so good in fact that people start repeating exactly the same hyperbolic assertions they made in the 60s, "Will computers out-think us soon, much?"

2009 You're Jia Deng et al. at Princeton in 2009 using cheap Mechanical Turk labor to source a huge dataset of labeled images. You make a yearly competition to have machines classify the images giving it the snappy title ImageNet Large Scale Visual Recognition Challenge (ILSVRC). The NSF, Google, Intel, Microsoft and Yahoo! pay the Turks for you

Success! It focuses a field of research.

You're a bunch of researchers and spend a number of years and a lot of concerted effort making neural networks better at recognizing border collies.

2014 You're a slimmer, faster, better model written by Christian Szegedy et al. at Google, you rock the ILSVRC charts with GoogLeNet and they include links to knowyourmeme.com in the liner notes of your publication. They also help others replicate your results so a pretrained model makes it into the model zoos

2016 You're us, or perhaps more accurately Audun. You download this model. It knows way too much about dogs. Very little about art.

You grab the wiki-art database and the associated metadata and retrain the network on style and subjects. You now have a network that can classify the works at the Norwegian National Museum by style. At least some of the time.

But you don't want classification. You want to make a map. So you chop off the classifier and keep the raw values of the activations from the last layer of the network. You now have a 1024 decimal numbers for each work in the collection.

Doh, people can't see in 1024 dimensions. You've failed. It won't work. But, wait there's a solution to this that everyone appears using.

Back in 2008, you're Laurens van der Maaten & Geoffrey Hinton who helpfully created t-SNE which was “better than existing techniques at creating a single map that reveals structure at many different scales. This is particularly important for high-dimensional data that lie on several different, but related, low-dimensional manifolds…” Woo.

You're us again. You run the collection through the network and then through t-SNE. It produces a map where seaside national romantic landscapes without mountains are clustered in a corner. You're good. Except you need a way of showing it to people.

You discuss. Perhaps render it as a tiled map at different resolutions and show it in leaflet.js. You (Audun) don't think this is difficult enough and also, the interface is way too familiar. It would simply be too easy for people to understand and actually use (Even). So you tile the works as sprites in huge images and send them along with data about their placement to the web browser, you ship the images to the user's GPU and do the layout in real-time in three.js.

You ship the site and can go back to worrying about American politics and global warming.

Generating landscape 'paintings'

You can train neural networks to generate images. This is done by combining two networks – one learns to recognize images, while the other learns to make images that can fool it. The networks are adversaries and the technique is called Deep Convolutional Generative Adversarial Networks (DCGAN).

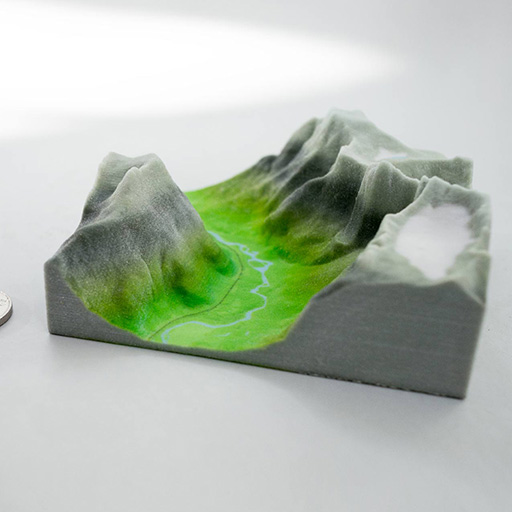

Given that national romantic landscapes figure prominently in the collection of the National Museum it was tempting to train a network generate new samples.

Other projects

-

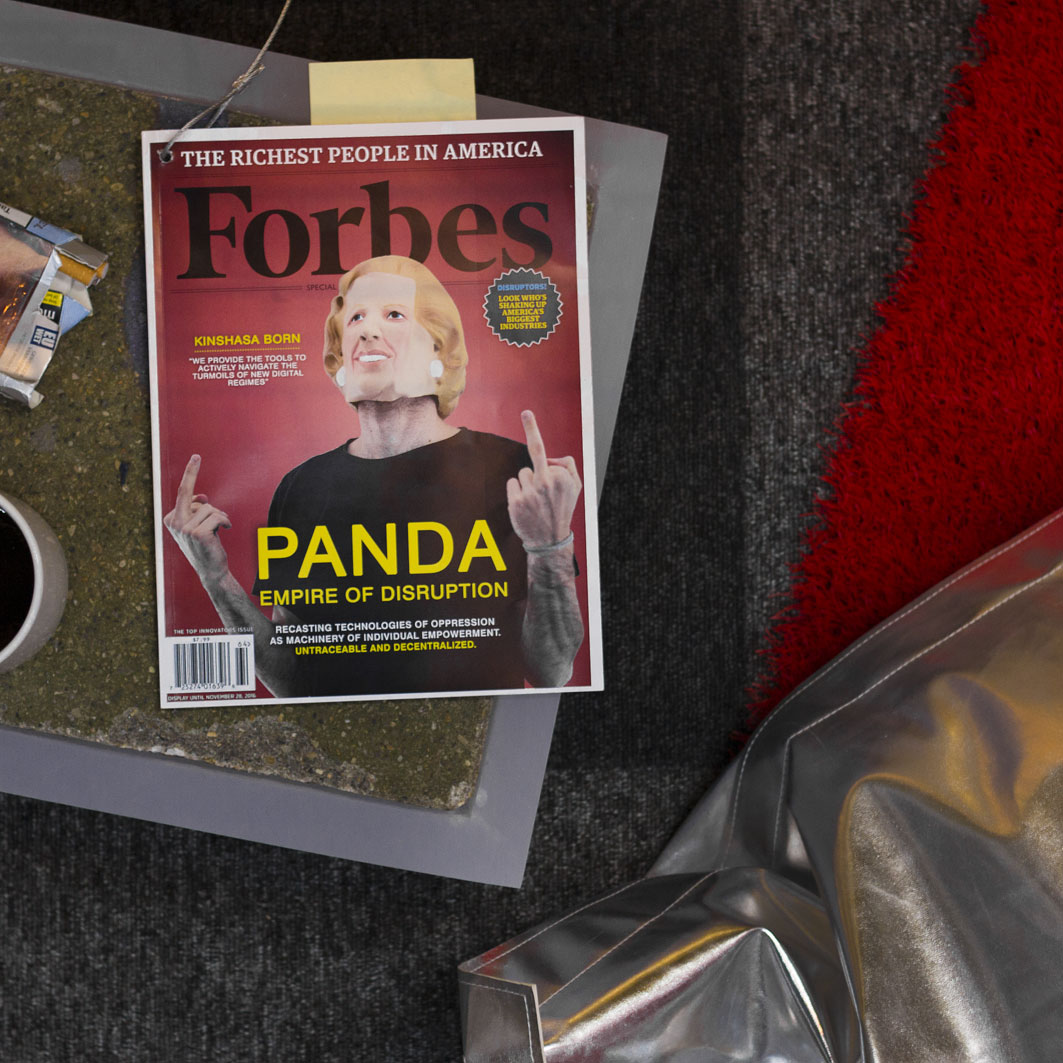

PANDA

Supercolluder for the gig economy

-

Principal Components

Machine learning in search of the uncanny

-

Terrafab

Own a small slice of Norway

-

OMA Website

Simple surface, intricate clockwork

-

Mapfest!

Helping liberate Norwegian geodata

-

Underskog

Friendly community for the Norwegian cultural fringe.

-

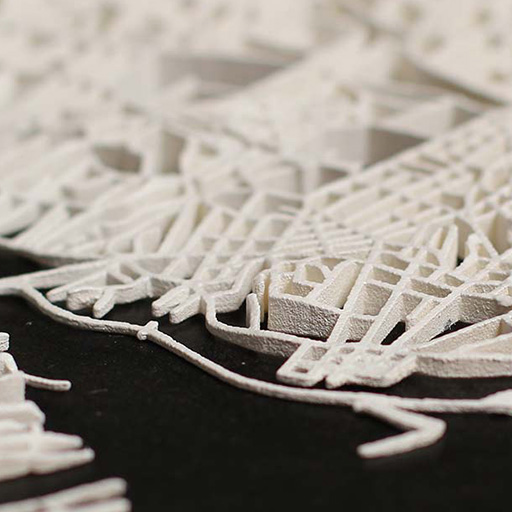

Intersections

Laser sintered topological maps for cars and social scientists

-

Chorderoy

Efficient text input for mobile and wearable devices